Memory in AI agents is broken, but why do developers say that?

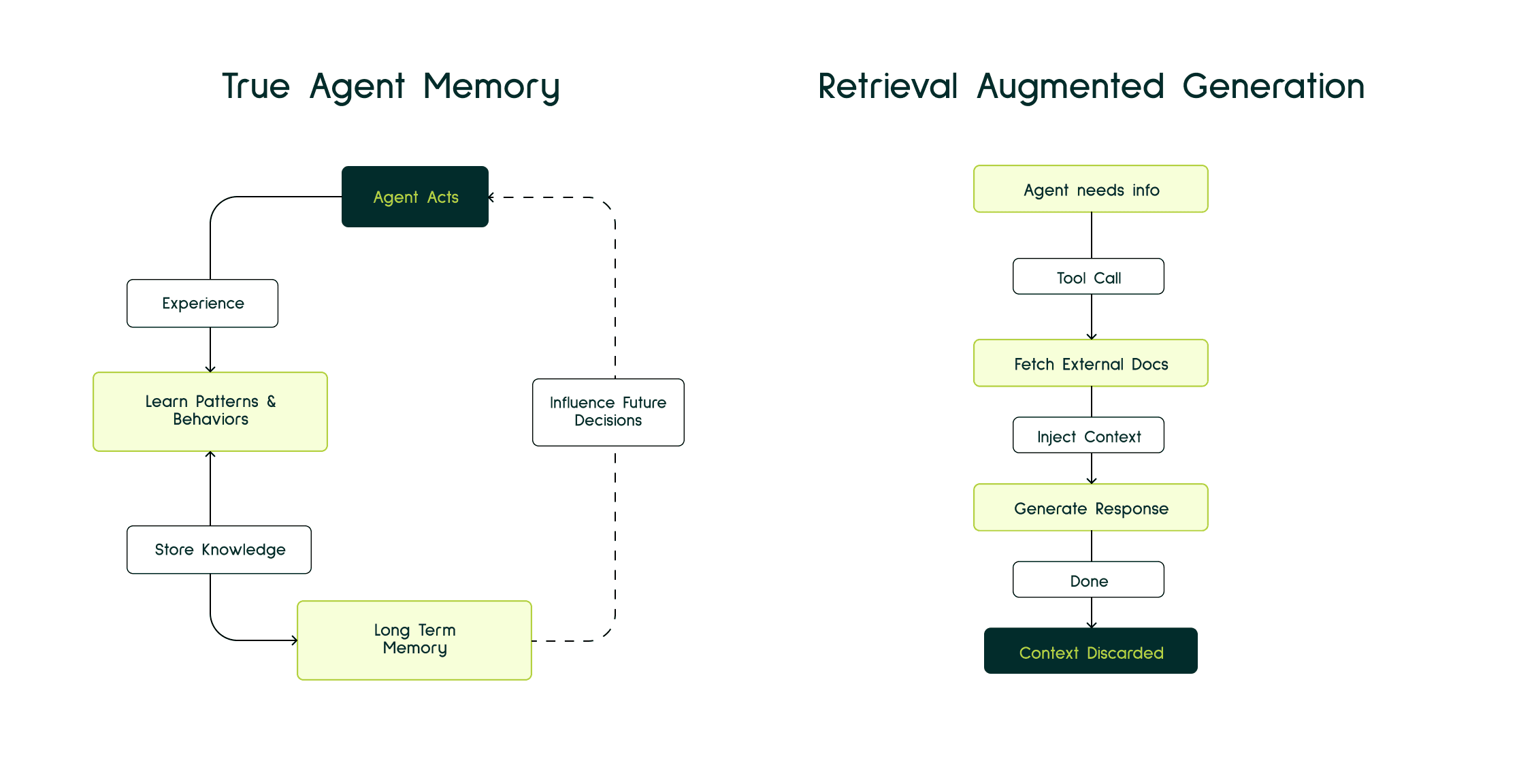

To understand this, we first need to separate memory from RAG, because they’re often confused.

RAG is essentially just-in-time context. When an agent needs information to answer something, it calls a tool, fetches the relevant documents, and uses them as context for that one response. That’s it.

Need info → call tool → retrieve context → respond.
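As a rough illustration of that flow, here is a minimal sketch. The `DOCS` list, the word-overlap `retrieve` function, and the `llm` callable are all assumptions made for this example; a real setup would use a proper retriever and a model API.

```python
from typing import Callable

# Hypothetical in-memory corpus standing in for a real document store.
DOCS = [
    "Deploy with docker compose up on the staging host.",
    "Logging is configured in settings.py via LOGGING.",
    "The billing service exposes a REST API on port 8080.",
]


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Toy retriever: rank documents by how many words they share with the query."""
    words = set(query.lower().split())
    ranked = sorted(
        docs,
        key=lambda d: len(words & set(d.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def answer(query: str, llm: Callable[[str], str]) -> str:
    """Need info -> call tool -> retrieve context -> respond."""
    context = retrieve(query, DOCS)
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {query}"
    return llm(prompt)  # nothing is kept once the response is returned
```

Note that nothing in this loop changes between calls: the agent is exactly as informed on the hundredth request as on the first.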

Memory, on the other hand, is different. It’s about learning patterns, behaviors, decisions, and preferences over time.

Why do we need memory?

If a user explains their preferences once, they shouldn’t have to repeat them in every new conversation.

For example, if you’re a developer who prefers camelCase over snake_case, you don’t want to remind the agent every single time.

Now, why do we need memory in coding agents? So that agents can understand and retain user-specific patterns and behaviors over time. If an agent solves a problem in a way that fits how the user works, it should learn from that interaction. The goal is to stop re-extracting the same context in every session and holding it only temporarily in a scratchpad.

Why is it not solved yet?

If you use any AI memory tool out there, they are largely the same. Current memory tools are an illusion, and they follow a predictable pattern:

Extract keywords and stated preferences from conversations

Convert them into embeddings and store them in a vector database

Retrieve them later using similarity search

Inject them back into the prompt as context

So what looks like “memory” is often just prompt augmentation: “User prefers Python. Deadline is Friday.”

Some tools go further and extract entities and relationships using complex prompts. But even then, memory is still treated as something to be stored and retrieved, not something to be learned and integrated.

And here’s the uncomfortable truth: What we call “coding agent memory” today isn’t really memory. It’s a slightly modified search engine.

When you store a “memory,” these systems convert your text into a vector embedding and save it in a vector database. Later, when you ask a question, they compute similarity between your question and those stored vectors.

This is semantic search, not cognitive memory.
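To make the pattern concrete, here is a minimal sketch of the store, search, and inject loop. The bag-of-words `embed` function is a deliberately crude stand-in for a real embedding model, and `VectorMemory` is a hypothetical class, not the API of any particular tool.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. An assumption, not a real embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorMemory:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def store(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda it: cosine(q, it[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = VectorMemory()
memory.store("user prefers python for backend work")
memory.store("deadline is friday")

# Retrieval is similarity ranking; "memory" is whatever gets pasted into the prompt.
hits = memory.search("which backend language does the user like")
prompt = "Here is what you know about the user: " + "; ".join(hits)
```

Everything the agent "remembers" here is the nearest stored string. There is no record of why the preference exists or what happened when it was ignored.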

When you say “make it production-ready,” you don’t just mean polish it.

You mean:

add proper logging, handle edge cases, implement error handling and avoid leaving print() statements scattered everywhere.

Now imagine you open a new chat.

You ask:

“Set up a production-ready backend for my new project.”

The agent responds:

“I’ll initialize a Node.js server with MongoDB. Also, here’s a Django template you didn’t ask for.”

Great. Thanks for nothing. It completely ignored your stack, your past choices, and your standards.

Current tools inject summaries into the prompt like:

“Here is what you know about the user: prefers Python, working on cloud migration, deadline Friday.”

But they stop there. They don’t answer the deeper questions:

Why does the user prefer Python? Was it due to past issues with JavaScript? Team familiarity? Performance constraints?

What failed previously — and why? Was it an incorrect function call? A wrong parameter? A flawed architectural assumption?

How does the deadline shape decisions? Should the agent optimize for speed and pragmatism, or long-term elegance and scalability?

Without integrating this context into reasoning, agents don’t truly learn. They just replay stored notes.

The problem isn’t storage. We have plenty of storage. The real problem is retrieval logic.

But better retrieval doesn’t just come from storing more data. It comes from storing it intelligently: structured, contextualized, and connected to outcomes. What we need isn’t bigger hard drives. We need better brains.

Current tools focus on user memory, but agents need to learn:

What worked: successful patterns and what the user liked or approved.

What failed: which paths are dead ends and what has already been tried without success.

This isn’t just about memory; it’s about the experiences an agent accumulates while pair programming with a user. That’s how agents move from tools to partners.

When should memory extraction be triggered: before the task, during execution, or after it finishes?

Triggering extraction after execution makes the most sense.

Once the user has completed the conversation, there’s enough context to see the full pattern. When the interaction completes, that gives the agent space to reflect on what actually worked and what didn’t.

Not just shallow notes like “User mentioned Django,” but deeper insights such as “User rejected the ORM suggestion twice and chose to stick with raw SQL.”
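A minimal sketch of what post-session extraction could look like is shown below. The `Event` schema and the `extract_insights` function are hypothetical, and the sketch assumes each suggestion in the finished session has already been labeled as accepted or rejected; in practice that labeling is the hard part.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Event:
    """One outcome recorded during a session (hypothetical schema)."""
    suggestion: str  # what the agent proposed, e.g. "the ORM suggestion"
    outcome: str     # "accepted" or "rejected"


def extract_insights(events: list[Event]) -> list[str]:
    """Run once after the session ends, when the full pattern is visible."""
    rejected = Counter(e.suggestion for e in events if e.outcome == "rejected")
    accepted = [e.suggestion for e in events if e.outcome == "accepted"]

    insights = [
        f"User rejected {suggestion} {count} time(s)"
        for suggestion, count in rejected.items()
    ]
    insights += [f"User approved {suggestion}" for suggestion in accepted]
    return insights


session = [
    Event("the ORM suggestion", "rejected"),
    Event("the ORM suggestion", "rejected"),
    Event("raw SQL", "accepted"),
]
insights = extract_insights(session)
```

Because it sees the whole session, the extractor can report that the ORM was rejected twice, which a per-message keyword extractor would miss.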

What is the solution?

To fix this, we have to stop throwing text into a vector database and hoping for the best. We need to shift from vector similarity to graph reasoning.

The Scenario

Last week, the agent generated a report using Django’s .annotate() and .aggregate(). The solution looked clean, but it was slow. The user profiled it, found it was generating 500+ queries (the N+1 problem), and rewrote it using a raw SELECT ... GROUP BY SQL query. The result was significantly faster. The user approved the final implementation.

Instead of storing a flat list of facts or a text blob, the agent stores this chain of events:

ReportingFeature -(attempted)-> DjangoORM

DjangoORM -(resulted_in)-> NPlusOneQueries

NPlusOneQueries -(impacted)-> HighLatency

HighLatency -(resolved_by)-> RawSQL

RawSQL -(validated_by)-> UserApproval

This allows for causal reasoning. The agent doesn’t just “know” you like Python; it understands why you made the choices you made. It knows that because a monolithic structure failed for you last month, you are now inclined toward microservices.
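The chain from the scenario can be held in a small adjacency structure and walked to recover the reasoning behind a decision. This is only a sketch: `ExperienceGraph` and its methods are hypothetical names, and a real system would likely use a graph database and handle branching paths.

```python
from dataclasses import dataclass, field


@dataclass
class ExperienceGraph:
    """Directed, labeled edges: source -> [(relation, target), ...]."""
    edges: dict[str, list[tuple[str, str]]] = field(default_factory=dict)

    def add(self, source: str, relation: str, target: str) -> None:
        self.edges.setdefault(source, []).append((relation, target))

    def trace(self, start: str) -> list[str]:
        """Follow the first outgoing edge from each node until the chain ends."""
        chain: list[str] = []
        node, seen = start, {start}
        while node in self.edges:
            relation, target = self.edges[node][0]
            chain.append(f"{node} -({relation})-> {target}")
            if target in seen:  # guard against cycles
                break
            seen.add(target)
            node = target
        return chain


graph = ExperienceGraph()
for source, relation, target in [
    ("ReportingFeature", "attempted", "DjangoORM"),
    ("DjangoORM", "resulted_in", "NPlusOneQueries"),
    ("NPlusOneQueries", "impacted", "HighLatency"),
    ("HighLatency", "resolved_by", "RawSQL"),
    ("RawSQL", "validated_by", "UserApproval"),
]:
    graph.add(source, relation, target)

# Walking from the feature yields the full "why" behind the raw SQL choice.
chain = graph.trace("ReportingFeature")
```

Asked to build another report, an agent with this graph can see that the ORM path led to high latency and that raw SQL was the approved fix, instead of only retrieving a note that says "user likes raw SQL".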

Memory isn't a storage issue, it's an integration issue.
